When you go to your [insert search application name here], enter a query, and hit the search button, what exactly are you searching on?

One of the hardest things to do in IT (or in any field, really) is to take a step back and look at the basics: the foundational knowledge behind things we may use every day without necessarily understanding how they really work.

After realizing I’ve been having the same conversation with different customers/students to explain these same main concepts over the last few years (both at FAST and now at cXense), I decided to explain a little bit about these 4 essential concepts here:

- Type of query (AND, PHRASE, OR)

- Where to search (all fields, body, title, etc.)

- Field Importance

- Sorting

Type of Query

The first thing you have to think about when constructing your search interface is: how do I want the system to match the text/query specified by the user?

To answer this question you must understand the differences between the three search requests explained below.

Note: the examples below are query-language-agnostic, so just replace them with the proper syntax for the search engine you are using. Even though the syntax may change, the concepts should remain the same.

AND query

Example: venture AND capital

This query will match only documents that contain all terms in the query, which in this case means that any document, in order to be returned, must contain both the term venture and the term capital. Those terms can be found together (e.g. “raised more venture capital money…”), separately (e.g. “is initiating a new venture with capital raised…”), or even in a different order (e.g. “he raised capital for his new venture…”).

This is the most common operator used across search applications and also the default operator in many search platforms (e.g. FAST ESP, FS4SP, cX::search).

PHRASE query

Example: “venture capital”

This query, in contrast to the AND query, will only return documents that contain this exact phrase. This means that a document with a text like “raised more venture capital money…” will match, but a document with “is initiating a new venture with capital raised…” will not, because there is an extra term (with) between the two required terms.

Search applications often use this operator behind the scenes whenever a user puts text between quotes in the search box. It’s very useful when the user is trying to find an exact phrase.

OR query

Example: venture OR capital

This last query is the most open of all, as it will return documents that contain any of the terms in the query. With this query, a document only needs to have the term venture or the term capital to be returned, without the need for having both (as was the case with the AND and PHRASE queries). This means that a document with the text “he decided to venture down the hall…” will be returned, as well as a document with the text “Brasília is the federal capital of Brazil”.
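To make the difference between the three query types concrete, here is a minimal Python sketch. It is not how any particular search engine implements matching; the function names (`tokenize`, `matches_and`, `matches_phrase`, `matches_or`) are made up for illustration, and the sample texts are loosely based on the examples above.

```python
import re

def tokenize(text):
    """Lowercase the text and split it into a list of word tokens."""
    return re.findall(r"\w+", text.lower())

def matches_and(terms, text):
    """True when every query term appears somewhere in the text."""
    tokens = set(tokenize(text))
    return all(term in tokens for term in terms)

def matches_phrase(terms, text):
    """True when the terms appear next to each other, in the given order."""
    tokens = tokenize(text)
    n = len(terms)
    return any(tokens[i:i + n] == terms for i in range(len(tokens) - n + 1))

def matches_or(terms, text):
    """True when at least one query term appears in the text."""
    tokens = set(tokenize(text))
    return any(term in tokens for term in terms)

docs = [
    "raised more venture capital money",
    "is initiating a new venture with capital raised",
    "he raised capital for his new venture",
    "he decided to venture down the hall",
    "Brasília is the federal capital of Brazil",
]
query = ["venture", "capital"]

and_hits = [d for d in docs if matches_and(query, d)]
phrase_hits = [d for d in docs if matches_phrase(query, d)]
or_hits = [d for d in docs if matches_or(query, d)]

print(len(and_hits), len(phrase_hits), len(or_hits))
```

In this toy data set the AND query matches the first three texts, the PHRASE query matches only the first one, and the OR query matches all five.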

Where to search

Now that you have decided what type of queries you want to execute (and, phrase, or), the next step is to decide where you want this search to occur. When asked “where do you want to search?” people usually reply with “everywhere, of course!”. Yet it is important to step back and think about whether that’s really what you want.

Imagine you go to your search application, type “financial systems” (with or without the quotes), and click the search button. What happens then? Where in the document do you believe this query will try to find the terms financial and systems?

The answer to these questions depends heavily on which search technology you are using behind the scenes:

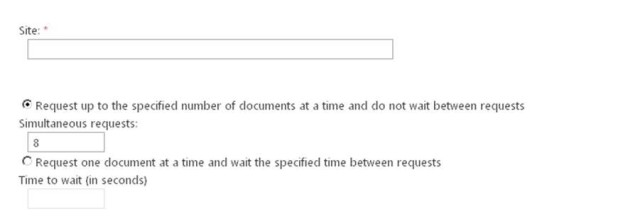

- in FAST ESP – this would be a query against the default composite field, which out-of-the-box would be comprised of fields such as body, title, url, keywords, etc.

- in FAST Search for SharePoint – this would be a query against the fulltext index, which by default contains fields such as title, author, body, etc.

- in cX::search – this would be a query against all searchable fields in the index

In the case of cX::search, if you do not define exactly which fields should be searched, the search will by default be executed against all the searchable fields in the index. This means that cX::search will look for the terms financial and systems in the fields title and body, but also in fields such as category, related_content, or even unitsInStock, which may not be exactly what you are looking for.

When I was teaching FAST Search for SharePoint, the main confusion for students was the fact that the default search was not across ALL fields, but instead just a subset of them, which meant that for every new managed property that you wanted to search by default (just by typing some terms in the search box, that is) you needed to make sure to add it to the fulltext index as well.

As you can see, even such a simple question can have very distinct answers depending on which search platform you are using, so the best way to avoid future problems is to first understand exactly how your specific search platform handles the default queries, and then use this knowledge to control exactly which fields you want to search on by default.

For cX::search, for example, this could be done by adding the desired list of fields before the query term:

?p_aq=query(title,body,description,tags,url,author:"financial systems", token-op=and)

In the example above we are being very clear about which fields should be used when looking for the query terms defined by the user, which makes it a lot easier to debug and answer questions like “why was this document returned in the results?”.
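The effect of restricting the searched fields can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: the `search` function, the sample documents, and their field values are hypothetical, and a term is allowed to match in any of the searched fields, which may differ from how your search platform behaves.

```python
import re

def tokenize(value):
    """Lowercase a field value and split it into word tokens."""
    return re.findall(r"\w+", str(value).lower())

def search(docs, terms, fields=None):
    """Return the ids of documents containing all terms in the searched fields.

    fields=None mimics an "all searchable fields" default.
    """
    hits = []
    for doc in docs:
        searched = fields if fields is not None else [f for f in doc if f != "id"]
        tokens = set()
        for field in searched:
            tokens.update(tokenize(doc.get(field, "")))
        if all(term in tokens for term in terms):
            hits.append(doc["id"])
    return hits

# Hypothetical documents: the second one only mentions the terms in its category.
docs = [
    {"id": 1, "title": "Financial systems overview",
     "body": "How banks run their core systems", "category": "finance"},
    {"id": 2, "title": "Quarterly newsletter",
     "body": "Team updates and events", "category": "financial systems"},
]
terms = ["financial", "systems"]

everywhere = search(docs, terms)
restricted = search(docs, terms, fields=["title", "body"])

print(everywhere, restricted)
```

Searching everywhere returns both documents, while restricting the search to title and body returns only the first one. With the explicit field list it is obvious why the newsletter is no longer returned.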

Field Importance

By now you should know how you want to search (and, phrase, or) and also where to search (title, body, etc.), so it’s time to decide which fields matter most to you among the ones selected in the previous step. As a starting point, take a look at the document examples below:

Document 1

Title: Market Research Findings – 2012

Description: This document summarizes the findings from the 2012 market research study…

Tags: research, 2012

Document 2

Title: About the market crash of 1929

Description: All the available research on the market crash of 1929…

Tags: stock, market, 1929

Document 3

Title: XYZ begins to explore new market

Description: After a few years focused on research, company XYZ began exploring a new market…

Tags: XYZ

And now consider the following query: market AND research

Based on the sample query and documents above, which document would you expect to be ranked higher?

Most people would say Document 1 should be ranked higher, and the reason is that users have been trained by search engines to expect, among other things, that anything found in the title of a document should have more relevance than something found somewhere in the body of the document. This is a very reasonable expectation, because we tend to accept that if someone went through the trouble of choosing specific terms to put in the title of a document, then those terms must be important.

So, depending on your search platform of choice, there are different ways for you to be explicit about what fields should have higher importance.

In cX::search, for example, the modified query would look like this:

?p_aq=query(title^5,tags^3,body:"market research", token-op=and)

The query above tells cX::search to:

- look for documents containing both the terms market and research;

- require that these terms be found in the title, tags, or body fields; and, even more importantly,

- treat terms found in the title as 5 times (title^5) more important than terms found in the body (the default field boost is 1); and

- treat terms found in the tags as 3 times (tags^3) more important than terms found in the body.
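To see how boosts like these can change the ranking, here is a toy scoring sketch in Python applied to the three sample documents. It is an assumption-laden illustration, not the ranking formula of cX::search or any other engine: the score simply adds the field boost once for each query term found in that field, and the description field stands in for the body with the default boost of 1.

```python
import re

# Boosts mirror title^5 and tags^3; description plays the role of the body (boost 1).
BOOSTS = {"title": 5, "tags": 3, "description": 1}

def tokenize(text):
    """Lowercase the text and return its set of word tokens."""
    return set(re.findall(r"\w+", text.lower()))

def contains_all(doc, terms, fields):
    """AND semantics: every term must occur in at least one searched field."""
    tokens = set()
    for field in fields:
        tokens |= tokenize(doc.get(field, ""))
    return all(term in tokens for term in terms)

def score(doc, terms, boosts):
    """Add the field boost once for every query term found in that field."""
    total = 0
    for field, boost in boosts.items():
        tokens = tokenize(doc.get(field, ""))
        total += boost * sum(1 for term in terms if term in tokens)
    return total

def rank(docs, terms, boosts):
    """Keep documents matching all terms, highest score first."""
    candidates = [d for d in docs if contains_all(d, terms, boosts)]
    return sorted(candidates, key=lambda d: score(d, terms, boosts), reverse=True)

docs = [
    {"name": "Document 1", "title": "Market Research Findings – 2012",
     "description": "This document summarizes the findings from the 2012 market research study…",
     "tags": "research, 2012"},
    {"name": "Document 2", "title": "About the market crash of 1929",
     "description": "All the available research on the market crash of 1929…",
     "tags": "stock, market, 1929"},
    {"name": "Document 3", "title": "XYZ begins to explore new market",
     "description": "After a few years focused on research, company XYZ began exploring a new market…",
     "tags": "XYZ"},
]
terms = ["market", "research"]

ranked = rank(docs, terms, BOOSTS)
for doc in ranked:
    print(doc["name"], score(doc, terms, BOOSTS))
```

Under this simplified scoring, Document 1 comes first because both query terms appear in its title, which carries the highest boost. Real ranking models take many more signals into account, so treat the numbers as illustrative only.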

In a similar fashion, FAST ESP has the composite-rank piece of the rank profile, which allows you to define how much importance you want to give to each field that is part of a composite field.

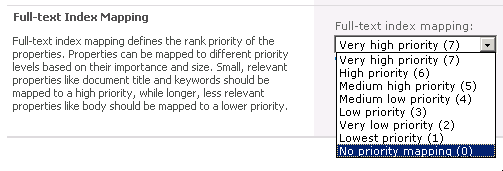

In FAST Search for SharePoint, you also have some options available both through the UI and through PowerShell, which allow you to configure which importance level a managed property should belong to when mapped to a fulltext index, as shown in the screenshot below:

As you can see from the examples above, using field boosts (or any similar feature of the search platform you are using) gives you the flexibility to be very precise about which fields matter most according to your specific business rules.

Sorting

The last important piece of this puzzle of configuring basic relevance settings for your search application is to decide how results should be sorted before being returned. This is crucial because, in the end, this is what decides what results will be displayed on top.

Remember the previous example that used field boosts to define the importance of each field? Well, now take a look at the cX::search request below:

?p_aq=query(title^5,tags^3,body:"market research", token-op=and)&p_sm=publication_date:desc

As you can see above, this query is explicitly requesting that results be sorted by publication_date in descending order. What this means is that any field boosts are completely ignored by the search engine. Yes, they are simply ignored, since we directly requested results to be sorted based on a date field, instead of the default sorting that is based on the ranking score.
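The following Python sketch shows why an explicit sort makes the boosts irrelevant. The scores and publication dates are invented for illustration, and `sort_results` is a hypothetical helper, not part of any search platform's API.

```python
from datetime import date

# Hypothetical results: ranking scores and publication dates are made up.
results = [
    {"name": "Document 1", "score": 15, "publication_date": date(2012, 3, 1)},
    {"name": "Document 2", "score": 10, "publication_date": date(2012, 9, 15)},
    {"name": "Document 3", "score": 7, "publication_date": date(2012, 11, 30)},
]

def sort_results(results, sort_field=None):
    """Order by ranking score unless an explicit sort field is requested."""
    key = sort_field if sort_field is not None else "score"
    return sorted(results, key=lambda r: r[key], reverse=True)

by_score = [r["name"] for r in sort_results(results)]
by_date = [r["name"] for r in sort_results(results, "publication_date")]

print(by_score)
print(by_date)
```

Once the sort key is the date field, the score never enters the comparison. In this made-up data, the document with the lowest ranking score ends up on top simply because it is the most recent.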

Sometimes this is exactly what you want, such as when the user has already drilled down to a subset of results and you want to allow him/her to just sort by price or average rating, for example (two options I often use when searching for products at Amazon).

A last option is to mix the two approaches, so that you still use the ranking score but add extra boosts that take into consideration how recent a document is (or how many units it has sold, or what its average rating is, etc.). Those are more advanced options that we will discuss another day, but for now just keep in mind that yes, that’s also possible 🙂